Today I discovered a ridiculously easy way to run a Druid cluster on GCP: flick a switch when creating a Cloud Dataproc cluster. It’s even a recent version (0.17 at the time of writing).

Great, right? (Assuming you don’t mind using something labelled alpha by Google.)

Customisation

There is literally no documentation other than the page I stumbled across: Cloud Dataproc Druid Component.

After running up a small cluster, I noticed some things were missing:

- The Druid router process hosts a great web console that makes one-off ingests easy. Unfortunately, the router is not enabled.

- The druid-google-extensions extension is not included, meaning the cluster cannot load files from GCS. (It is possible that the GCS Hadoop connector would make gs:// URIs work, although I didn’t try this.)

Luckily, Cloud Dataproc provides a mechanism called initialisation actions for customising nodes. These are nothing more than scripts that each node pulls from GCS and executes. I created two scripts to rectify the above.
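Every node runs each initialisation action, so a script that should only touch the master (such as one setting up the router) has to check its role first. The skeleton below is a minimal sketch. It assumes the `dataproc-role` metadata attribute (`Master` or `Worker`) and the `/usr/share/google/get_metadata_value` helper used by Google's public initialization-actions scripts. `configure_master` is a hypothetical placeholder for the real work.

```shell
#!/usr/bin/env bash
# Skeleton initialisation action that only acts on the master node.
set -euo pipefail

METADATA_HELPER=/usr/share/google/get_metadata_value

# Print this node's Dataproc role ("Master" or "Worker"),
# or "unknown" when not running on a Dataproc node.
node_role() {
  if [[ -x "${METADATA_HELPER}" ]]; then
    "${METADATA_HELPER}" attributes/dataproc-role
  else
    echo "unknown"
  fi
}

# Succeed only for the master role.
is_master() {
  [[ "$1" == "Master" ]]
}

# HYPOTHETICAL placeholder for the real work (e.g. writing Druid config).
configure_master() {
  echo "configuring Druid on the master node"
}

ROLE="$(node_role)"
if is_master "${ROLE}"; then
  configure_master
else
  echo "role '${ROLE}': nothing to do"
fi
```

Because workers simply log and exit successfully, the same script can be passed to the whole cluster without failing cluster creation.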

- enable-druid-router.sh creates a config file and systemd unit for the router process, so the master node will listen on port 8888. (Gist)

- enable-google-extensions.sh appends a different druid.extensions.loadList line to the common properties file, enabling GCP ingest support. (Gist)
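Here is a minimal sketch of what an enable-druid-router.sh-style action could look like. It is not the contents of the Gist. The install location (`DRUID_HOME`, defaulting to `/usr/lib/druid`), the `conf/` layout, the heap size and the `druid-router` unit name are all hypothetical, so check them against a real node. The router properties are standard Druid router settings. The management proxy is what lets the web console reach the coordinator and overlord APIs.

```shell
#!/usr/bin/env bash
# Sketch of an enable-druid-router.sh-style initialisation action.
# HYPOTHETICAL: DRUID_HOME, the conf/ layout, the heap size and the
# druid-router unit name are assumptions, not documented Dataproc paths.
set -euo pipefail

DRUID_HOME="${DRUID_HOME:-/usr/lib/druid}"

# Router runtime.properties: listen on 8888 and enable the management
# proxy, which the web console uses to reach the coordinator/overlord.
router_properties() {
  cat <<'EOF'
druid.service=druid/router
druid.plaintextPort=8888
druid.router.defaultBrokerServiceName=druid/broker
druid.router.coordinatorServiceName=druid/coordinator
druid.router.managementProxy.enabled=true
EOF
}

# systemd unit for the router. $1 = Druid home, $2 = router config dir.
# Java expands the lib/* classpath wildcard itself.
router_unit() {
  local home="$1" conf="$2"
  cat <<EOF
[Unit]
Description=Druid router (web console on port 8888)
After=network.target

[Service]
ExecStart=/usr/bin/java -Xms512m -Xmx512m -Duser.timezone=UTC -Dfile.encoding=UTF-8 -cp "${home}/conf/druid/cluster/_common:${conf}:${home}/lib/*" org.apache.druid.cli.Main server router
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

install_router() {
  local conf="${DRUID_HOME}/conf/druid/cluster/query/router"
  mkdir -p "${conf}"
  router_properties > "${conf}/runtime.properties"
  router_unit "${DRUID_HOME}" "${conf}" > /etc/systemd/system/druid-router.service
  systemctl daemon-reload
  systemctl enable --now druid-router
}

# Only the master should run the router; do nothing elsewhere
# (including off-Dataproc, where the metadata helper is missing).
HELPER=/usr/share/google/get_metadata_value
if [[ -x "${HELPER}" ]] && [[ "$("${HELPER}" attributes/dataproc-role)" == "Master" ]]; then
  install_router
fi
```

The broker and coordinator service names must match the `druid.service` values the Dataproc-managed processes actually use. That is another assumption worth verifying.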

Nothing too out of the ordinary.

Running it

Anyone who is familiar with Druid will know that it can take a bit of effort to learn and configure well. For a simple cluster, this can be reduced to almost a one-liner:

gcloud dataproc clusters create druid-example \

--region europe-west1 \

--subnet default \

--zone europe-west1-b \

--master-machine-type n1-standard-4 \

--master-boot-disk-size 500 \

--num-workers 2 \

--worker-machine-type n1-standard-4 \

--worker-boot-disk-size 500 \

--num-preemptible-workers 2 \

--image-version 1.5-debian10 \

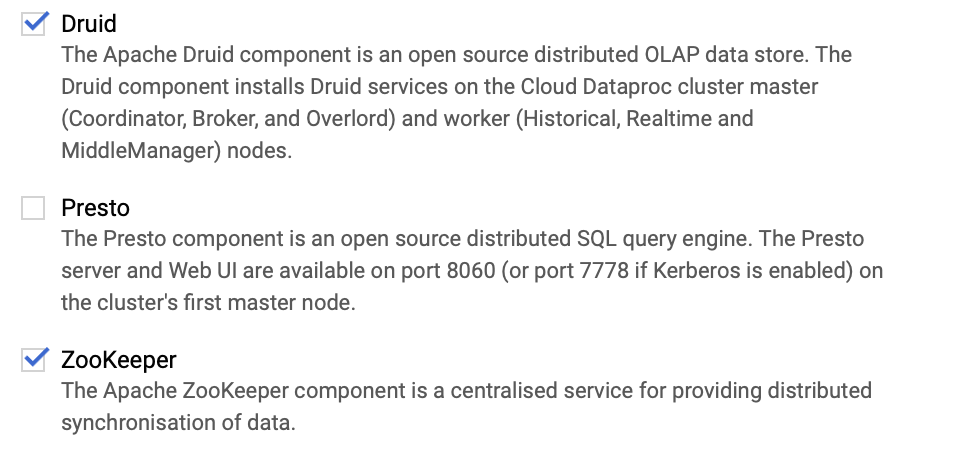

--optional-components DRUID,ZOOKEEPER \

--tags druid \

--project myproject \

--initialization-actions 'gs://your-dataproc-actions-bucket/enable-druid-router.sh'
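As written, the command applies only the router action. `--initialization-actions` accepts a comma-separated list of Cloud Storage URIs, so both scripts can run at cluster creation. This small helper sketch builds that list. The bucket name is the same placeholder used in the command.

```shell
#!/usr/bin/env bash
# Build a comma-separated --initialization-actions value from a bucket
# name and a list of script names (placeholders, not real objects).
set -euo pipefail

init_actions() {
  local bucket="$1"
  shift
  local uris=() script
  for script in "$@"; do
    uris+=("gs://${bucket}/${script}")
  done
  local IFS=,   # "${uris[*]}" joins elements with the first char of IFS
  echo "${uris[*]}"
}

ACTIONS="$(init_actions your-dataproc-actions-bucket \
  enable-druid-router.sh enable-google-extensions.sh)"
echo "--initialization-actions '${ACTIONS}'"
```

Once the cluster is up, one way to reach the router console is through an SSH tunnel to the master, which Dataproc names `<cluster>-m`. For example, run `gcloud compute ssh druid-example-m --zone europe-west1-b -- -L 8888:localhost:8888 -N`, then browse to http://localhost:8888.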

Thoughts

The component is labelled alpha, so it could vanish at any time. It isn’t doing anything clever; it just means that Druid is part of the standard image for all Dataproc machines, so it is ready to use within a few minutes of provisioning a cluster.

The above initialisation actions are brittle because I’m assuming Google won’t change where they install Druid.
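One way to make that assumption fail loudly rather than silently is to probe a list of candidate directories and stop the action if none of them looks like a Druid install. This is a sketch only. The candidate paths and the "has `lib/` and `conf/druid/`" heuristic are assumptions, not documented Dataproc behaviour.

```shell
#!/usr/bin/env bash
# Locate Druid defensively instead of hard-coding a single path.
set -euo pipefail

# Print the first candidate directory that looks like a Druid install
# (contains lib/ and conf/druid/); return non-zero if none does.
find_druid_home() {
  local dir
  for dir in "$@"; do
    if [[ -d "${dir}/lib" && -d "${dir}/conf/druid" ]]; then
      echo "${dir}"
      return 0
    fi
  done
  return 1
}
```

In an init action, this could be used as `DRUID_HOME="$(find_druid_home /usr/lib/druid /opt/druid)" || exit 1` (hypothetical paths). A non-zero exit from an initialisation action should then fail cluster creation instead of leaving a half-configured Druid behind.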

By default, the cluster uses HDFS for deep storage rather than GCS or S3. This could be changed with yet another initialisation action.
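Here is a sketch of such an action. It uses the Google Cloud Storage extension settings (`druid.storage.type=google`, `druid.google.bucket`, `druid.google.prefix`) and the same append trick as enable-google-extensions.sh. Java properties files keep the last value for a repeated key, so appended lines override earlier ones. The extension list is an assumption, because the list Dataproc ships with isn't documented. Keep anything else the cluster already loads.

```shell
#!/usr/bin/env bash
# Sketch: move Druid deep storage from HDFS to GCS by appending overrides
# to common.runtime.properties. Java properties files keep the LAST value
# for a repeated key, so the appended lines win.
set -euo pipefail

# $1 = path to common.runtime.properties, $2 = GCS bucket, $3 = object prefix
append_gcs_deep_storage() {
  local props="$1" bucket="$2" prefix="$3"
  cat >> "${props}" <<EOF

# --- appended by GCS deep storage init action ---
# ASSUMPTION: extension list; keep anything else the cluster already loads.
druid.extensions.loadList=["druid-hdfs-storage", "mysql-metadata-storage", "druid-google-extensions"]
druid.storage.type=google
druid.google.bucket=${bucket}
druid.google.prefix=${prefix}
EOF
}

# Print the effective (last) value of a key, for simple key=value lines.
effective_value() {
  awk -F= -v k="$2" '$1 == k { v = substr($0, length(k) + 2) } END { print v }' "$1"
}
```

For example, `append_gcs_deep_storage /path/to/_common/common.runtime.properties my-druid-bucket druid/segments`, where the path, bucket and prefix are all placeholders. The Druid services then need restarting to pick up the change. Segments already written to HDFS are not migrated.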

You’re paying the Cloud Dataproc tax on top of the instance costs, although this isn’t an excessive sum.

You’re running a ton of irrelevant Hadoopy stuff you don’t need or want. This can be uninstalled when bringing the cluster up. On the flip side, Hadoop/YARN batch ingestion is set up and ready to go out of the box.
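A lighter-touch sketch than uninstalling packages stops and disables unneeded units, skipping any that are not installed. The unit names are hypothetical guesses at Dataproc's Hive and Spark services, so list the real ones with `systemctl list-unit-files` on a node first. YARN is deliberately left alone because Hadoop batch ingestion depends on it.

```shell
#!/usr/bin/env bash
# Sketch: stop and disable services Druid does not need, skipping any
# unit that is not installed. YARN is left running for batch ingestion.
set -euo pipefail

# HYPOTHETICAL unit names; verify on a real node before using.
UNNEEDED_UNITS=(hive-server2 hive-metastore spark-history-server)

disable_if_present() {
  local unit
  for unit in "$@"; do
    # "systemctl cat" exits non-zero when the unit file does not exist.
    if systemctl cat "${unit}.service" >/dev/null 2>&1; then
      systemctl disable --now "${unit}"
      echo "disabled ${unit}"
    else
      echo "skipped ${unit} (not installed)"
    fi
  done
}
```

On a node, this would be invoked as `disable_if_present "${UNNEEDED_UNITS[@]}"`.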

Druid cluster metadata lives in a MySQL instance on the master node. Backup strategies for this are left as an exercise for the reader. Loss of cluster metadata is bad.
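One possible starting point for that exercise is to stream a `mysqldump` of the metadata database to GCS. This sketch rests on several assumptions: the database is called `druid`, the invoking user can authenticate to MySQL (for example via `~/.my.cnf`), the tables are InnoDB so `--single-transaction` gives a consistent snapshot without locking, and `gsutil` is on the path. The bucket name is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: dump the Druid metadata database to GCS.
# ASSUMPTIONS: database "druid", MySQL credentials available to the caller,
# InnoDB tables, gsutil installed; the bucket is a placeholder.
set -euo pipefail

# $1 = bucket, $2 = date stamp (YYYY-MM-DD)
backup_uri() {
  echo "gs://$1/druid-metadata/$2.sql.gz"
}

# $1 = bucket, $2 = database name (defaults to the assumed "druid")
backup_metadata() {
  local bucket="$1" db="${2:-druid}"
  # --single-transaction: consistent InnoDB snapshot without table locks.
  # "gsutil cp -" streams the compressed dump from stdin to the object.
  mysqldump --single-transaction "${db}" \
    | gzip \
    | gsutil cp - "$(backup_uri "${bucket}" "$(date +%F)")"
}
```

A daily cron entry on the master calling `backup_metadata my-backup-bucket` would be one way to schedule it. Restoring is the reverse: `gsutil cat` the object, `gunzip` it and pipe the result into `mysql`.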

One of the nice things about Druid is the ability to scale out the various processes independently as required. This approach is opinionated: the master node(s) run the query broker, coordinator and overlord processes (as well as ZooKeeper), while the worker nodes run the historical, middle manager and indexing processes.
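To see that layout on a running cluster, note that each Druid process answers `GET /status/health` on its own port. This sketch probes a node using Druid's stock default ports (coordinator 8081, broker 8082, historical 8083, overlord 8090, middle manager 8091, router 8888). Whether Dataproc keeps those defaults is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: report which Druid processes answer on a node, assuming
# Druid's default plaintext ports.
set -euo pipefail

# Print the default port for a Druid process type; fail for unknown types.
default_port() {
  case "$1" in
    coordinator)   echo 8081 ;;
    broker)        echo 8082 ;;
    historical)    echo 8083 ;;
    overlord)      echo 8090 ;;
    middleManager) echo 8091 ;;
    router)        echo 8888 ;;
    *) return 1 ;;
  esac
}

# $1 = host, remaining args = process types to probe via /status/health.
check_node() {
  local host="$1" proc port
  shift
  for proc in "$@"; do
    port="$(default_port "${proc}")" || { echo "unknown process: ${proc}" >&2; continue; }
    if curl -fsS --max-time 5 "http://${host}:${port}/status/health" >/dev/null 2>&1; then
      echo "${proc} (${port}): up"
    else
      echo "${proc} (${port}): down"
    fi
  done
}
```

For example, run `check_node druid-example-m broker coordinator overlord` and `check_node druid-example-w-0 historical middleManager` from a machine that can reach the nodes. Dataproc names the master `-m` and the workers `-w-0`, `-w-1` and so on.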

That said, there still might be a lot of value in this simple setup. It’ll be interesting to see if Google evolve this component in the future. Even some customisation options would be great.